‘The Wrong Answer Here is Just Avoiding It:’ ODU Educators Weigh in On AI in the Classroom

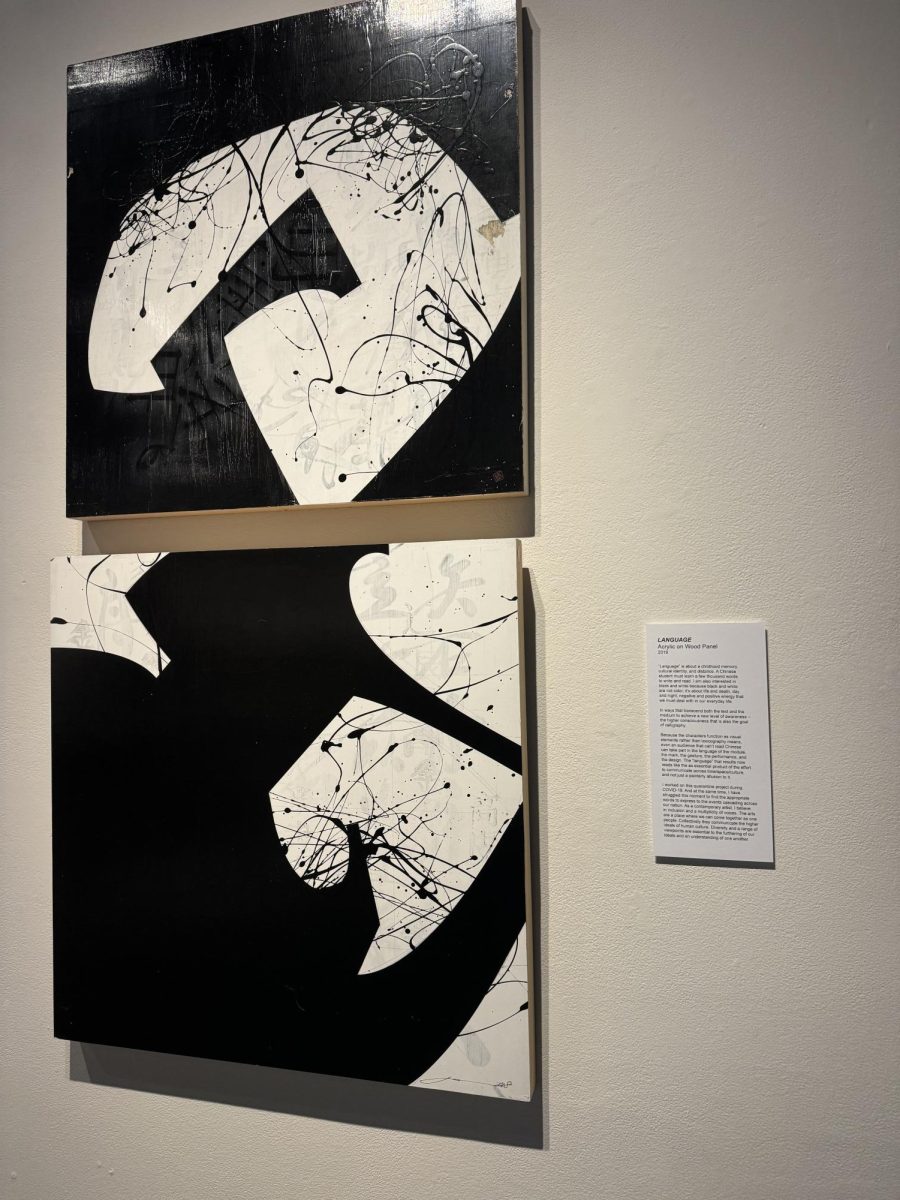

Photo courtesy ODU Libraries and the Center for Faculty Development, screenshot via Zoom.

Professors Kristi Costello and Jeremy Moody speak on a panel about AI integration into the classroom on Feb. 6, 2023.

February 17, 2023

“[AI] is not a fad. AI’s been a part of our lives for a while and we’re just becoming a little bit more conscious of it,” said Jay O’Toole, Assistant Professor of Management, answering the question raised by the title of the panel “Teaching and Learning with AI–Is It Just Another Fad?”

The public panel took place on Feb. 6 at the Perry Library and was moderated by M’Hammed Abdous of the Center for Faculty Development. It focused on the use of AI tools, like ChatGPT, by students and educators.

How Can Faculty Utilize AI?

The panel first tackled the question of how AI can help faculty with teaching and research. M’Hammed Abdous opened this section by sharing a link to Future Tools, a website that collects and organizes AI tools related to academia.

All four ODU educators on the panel seemed largely enthusiastic about the idea of AI integration into the classroom. Jeremy Moody, adjunct instructor of Philosophy and Religious Studies, pointed out that AI “takes so much of [the] legwork” out of teaching, citing AI that can create annotated bibliographies as an example. Professor Moody insisted that “we’re holding on to the way things were and not looking at…the way things could and probably should be.”

However, Professor Moody did not ignore the possibility of misinformation being spread through AI applications: “As scholars and academics, we have to make sure the information in our fields is correct. This is how we combat misinformation.” He shared that one assignment he gives his students is having them edit Wikipedia, and proposed that educators “and our students [can] help make [AI] better.”

Kristi Costello, associate professor of English, said she’d “like to see us kind of steer into ChatGPT and think about what do we actually value when we’re assigning students writing…how do we get them to learn what we want them to learn? Maybe that’s through writing and having local, contextualized writing assignments, but sometimes that might actually be not engaging them in writing…and that might be more oral exams and that might be more presentations and that might be more experiential work.”

“As a writing teacher, somebody who runs writing programs, I’m the first to admit that I think the ways we’ve been teaching writing are inefficient and kind of antiquated, and so I’m hoping that this is going to kind of propel us forward pedagogically,” Professor Costello continued. “My hope is that just by having these conversations we can think about, what is it that we value in learning? And how do we get that to our students?”

Professor O’Toole brought up the possibility of “… using AI as a generative process … I can think about asking my students to come up with a whole set of entrepreneurial ideas. You can use AI to help you generate that initial list of ideas. … But then, how do they deconstruct those ideas to be able to evaluate the efficacy of them?”

“If you want to use an AI tool to help you begin, I can support that,” Professor O’Toole said. “Because it’s through the revisions and the critical consciousness that you bring to your writing that makes sense of it.”

Multiple members of the panel acknowledged the usefulness of AI tools such as ChatGPT when generating the basis for formulaic pieces of writing, such as syllabi, rubrics, and, according to Sampath Jayarathna, Assistant Professor of Computer Science, “boilerplate code.”

How Can Students Utilize AI?

Professor Jayarathna brought up concerns about “isolated” distance learners and how AI can help them: “They don’t have that experience working in a classroom. … [AI] can provide them another avenue to get the answers and interact with another [person], even in an AI sense.” He also posited that AI provides a way for students to learn by “going back and forth like a tutorial.”

Professor Jayarathna concluded by advocating for AI as a supplement to help from a professor, saying, “at 3 o’clock in the morning, if a student has a question, the professor is not going to be there to answer that…so an AI can provide you the response, [then] the professor can go back and endorse or provide the correct solution.”

“ChatGPT can be used to help students polish their writing,” Professor Costello said. “You can put in an essay that you’ve written and say ‘ChatGPT, make my essay better.’ And while you can decide on your own what the ethical concerns are…this is what faculty have been asking writing center tutors to do for students forever, right? And frequently as a writing center director I’ve had to say, our goal is not to help your student create better writing, our goal is to help your student become a better writer. In some ways, faculty have been asking for this for a long time.”

Professor Costello stated that “Using ChatGPT in the classroom can also be a really interesting way to show our students their own voices, and to give them a sense of how they can write and what they can write that ChatGPT can’t do for them. … Talking about the affordances and limitations of the program in our classes might actually give some of our students some of the confidence that so many of them lack coming into our composition classes.”

Professor Costello also brought up that “ChatGPT could be a really useful tool for our students for whom English is an additional language.”

Ethical Concerns About AI in Academia

It seems that, wherever AI technology goes, ethical concerns follow, and the realm of academia is no different.

“One of the things we need to talk to our students about is, if they’re submitting work that was generated by something like ChatGPT, they are accountable for what has been put on the page,” Professor Costello said. “They’re accountable for its information, its misinformation. As of right now, ChatGPT has trouble synthesizing sources. It has trouble evaluating sources. A lot of the links are often dead links, so [we should be] making sure that students know that when they use this tool that they’re accountable for whatever it produces. And so it is their job to look at it critically and not passively.”

In the Q&A section at the end of the panel, a Zoom participant asked a question that has been weighing heavily on the minds of many educators: how can educators tell when a student turns in AI-generated work? The panel brought up tools such as OpenAI and ZeroGPT, which purport to detect the use of AI in text.

Professor Costello responded to an audience member who raised concerns about the over-reliance of students on AI tools to complete assignments. She suggested “using more of a flipped classroom model and doing more of that work that they used to do on their own together in the classroom. Doing more writing in the classroom, and, similar to math, showing their work.”

Another questioner asked about the possibility of ODU as a whole releasing a statement about the use of ChatGPT.

Professor Moody shared that in his classroom, he will follow a route of talking with his students so they “come to a consensus and agreement with me on whether or not we’re going to allow the use of it, how it can be used, and that gets then put into the syllabus,” though he noted his willingness to comply with an institutional-level statement once one is released.

M’Hammed Abdous confirmed that “we are working on drafting a statement that would be used. … It’s going to come through the Office of the Provost for Academic Affairs, basically sharing some of the language, the verbiage that can be included for our courses.”

The Takeaway

With the widespread release of tools such as ChatGPT, academia is now grappling with the same question that has daunted workers from manual laborers to artists: is AI going to replace humans? Professor Jayarathna says no. “It’s not gonna replace us. … It’s not gonna replace who we are and what humans are,” he said. “This is the first time that we’ve seen an AI tool that [can] really help the community in some way.”

Professor O’Toole summed up the spirit of the panel when he said, “I can’t tell you what the right answer is for your classroom. … I can give you examples of how I think about integrating [AI], but it’s going to be very unique to what you do and what you want to accomplish. … But I will say I feel pretty confident that the wrong answer here is just avoiding it.”